How we stop repeat pest problems

We don’t just treat and leave. We use a 3-step process to solve your pest problems.

Inspection

We find where pests are getting in and what conditions are driving activity in your home.

Fix Access

We identify priority entry points and recommend sealing or exclusion where it matters most.

Targeted Treatment

We target and eliminate pests at the source, saving you time and money.

Local Data, Not Guesswork

Our 90-day field study on winter rodent activity in Central Mass

We don’t just claim our sensors work.

We prove it with real data. Our 90-day winter study tracked rodent activity across Sterling, MA, from October 2025 through January 2026.

The findings:

- Rodents stay active even at -4°F (activity drops by only 56% at the coldest temperatures)

- Activity increases during storms, not before them

- Deep snow (6+ inches) reduces surface movement by 48%

- Peak activity shifts from 9 PM to 6 PM on freezing days

This is why our monitoring catches problems weeks before traditional monthly trap checks.

Zero guesswork.

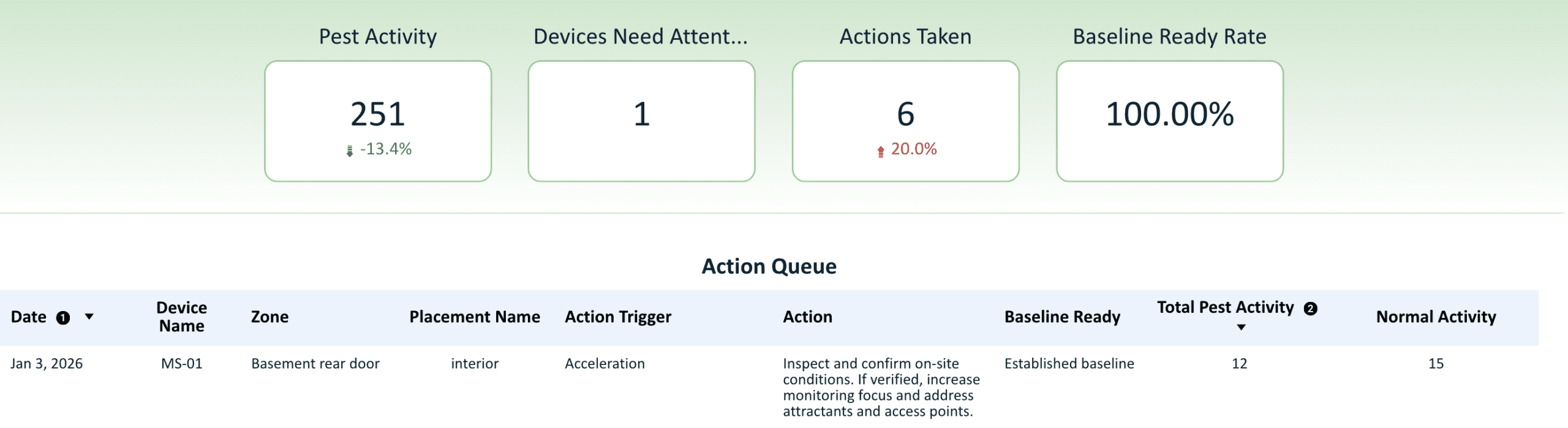

Instead of waiting for sightings, RodentRX tracks activity between visits, and you get a detailed report. We use these early signals to respond sooner and prioritize action.

What you get

How we decide

We track pest activity on your property so you can sleep well.

Pest Activity and Weather Index

31-day aggregation of digital pest-monitoring data across customer properties. All values are scaled for visualization.

Built for Worcester County Homes

Older basements, fieldstone foundations, and seasonal pressure create predictable entry points. From Northbridge to Gardner, we have you covered. Our inspections focus on access, moisture, and repeat drivers, not guesswork.

We offer both residential and commercial services

For Commercial Facilities

Monitoring + Documentation You Can Stand Behind

Frequently Asked Questions

Do you offer residential pest control?

Yes, we offer a full lineup of residential pest control services. From mice to termites, we have you covered.

Do you offer commercial services?

Yes. Our commercial services are built around your compliance and risk-mitigation needs.

What are your business hours?

We answer calls and emails from 6 AM to 10 PM, and we provide service at any hour of the day or night to fit your schedule.